Cerebras at $250M

Jan-Erik Asplund

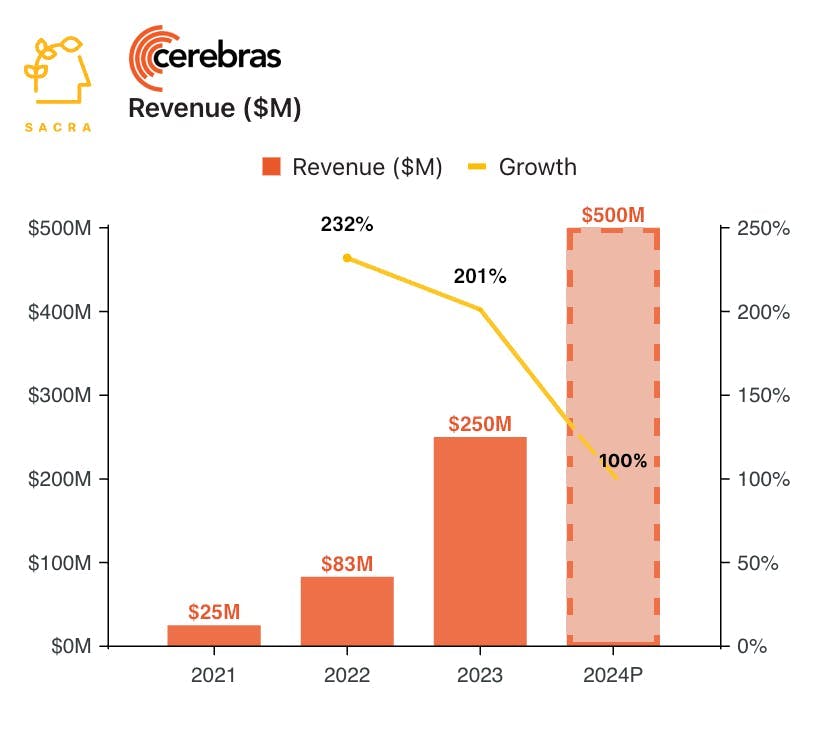

TL;DR: Sacra estimates that Cerebras generated $250M of revenue in 2023, up 200% year-over-year, with growth accelerating as big enterprises training models on their proprietary data drive up demand for AI compute. For more, check out our full report and dataset on Cerebras.

Key points via Sacra AI:

- Cerebras (2015) designed its Wafer-Scale Engine chip to vastly accelerate training on the compute-intensive BERT models used in scientific deep learning, finding product-market fit with national labs like Argonne and Livermore working on protein folding, climate prediction, and molecular dynamics. Previously, training large-scale deep learning models on datasets like ImageNet required massive clusters of hundreds of graphics processing units (GPUs). Cerebras' design, which integrates thousands of cores onto a single chip, reduced training time from ~2 weeks to 18 minutes, but at $2M per chip, its market was largely limited to state-backed research labs.

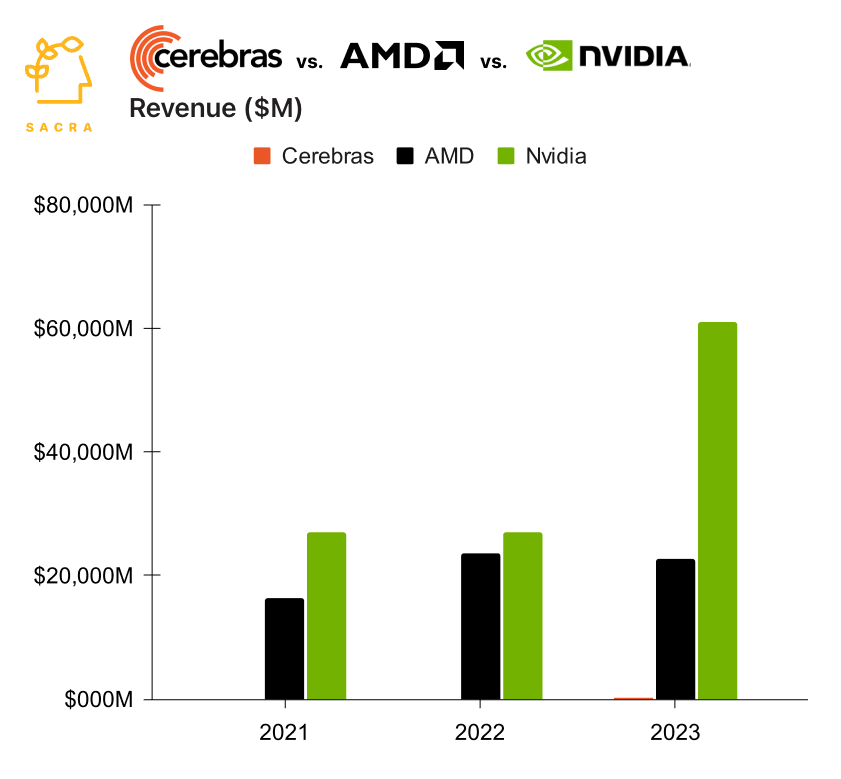

- Cerebras grew to $250M in revenue in 2023 (up 200%) on growing demand from large enterprises, including pharmaceutical companies like GlaxoSmithKline (NYSE: GSK) and AstraZeneca (NASDAQ: AZN) and energy companies like TotalEnergies (NYSE: TTE), that train models for drug discovery and seismic modeling. Compare to fellow AI chip startup Groq, which has projected $100M in revenue for 2024 (up 9,560%), Nvidia (NASDAQ: NVDA) at $60.9B of revenue in 2023, up 126%, and AMD (NASDAQ: AMD) at $22.7B of revenue, down 4% year-over-year.

- With GPTs ascendant (vs BERT models), Cerebras and Groq are both repurposing their chips to move into the $150B and growing market of LLM and AI model training to take on Nvidia. Their challenge will be building a software and hardware ecosystem around their chips—they’re going up against CUDA, Nvidia’s collection of hardware-coupled libraries, compilers, and development tools that is relied upon by ~80% of all AI engineers today.
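
The growth rates quoted above are easier to compare once they are converted back into the prior-period revenue they imply. The following is a minimal sketch of that arithmetic, using only the revenue and growth figures from the key points; the function name `implied_prior_revenue` is a hypothetical helper, and the outputs are rough back-of-the-envelope values, not reported figures.

```python
def implied_prior_revenue(current: float, growth_pct: float) -> float:
    """Back out prior-period revenue from current revenue and percent growth.

    current = prior * (1 + growth_pct / 100), so prior = current / (1 + growth_pct / 100).
    """
    return current / (1 + growth_pct / 100)


# (revenue in USD, year-over-year growth in percent), as quoted in the key points
figures = {
    "Cerebras (2023)": (250e6, 200),
    "Groq (2024, projected)": (100e6, 9560),
    "Nvidia (2023)": (60.9e9, 126),
    "AMD (2023)": (22.7e9, -4),
}

for name, (revenue, growth) in figures.items():
    prior = implied_prior_revenue(revenue, growth)
    print(f"{name}: ${revenue / 1e6:,.0f}M at {growth:+,}% implies ~${prior / 1e6:,.1f}M prior")
```

On these numbers, Cerebras' 200% growth implies a prior-year base of roughly $83M, while Groq's 9,560% projected growth implies a base of only about $1M, which is why the two percentages are not directly comparable.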

For more, check out this other research from our platform: