Runway vs. OpenAI

Jan-Erik Asplund

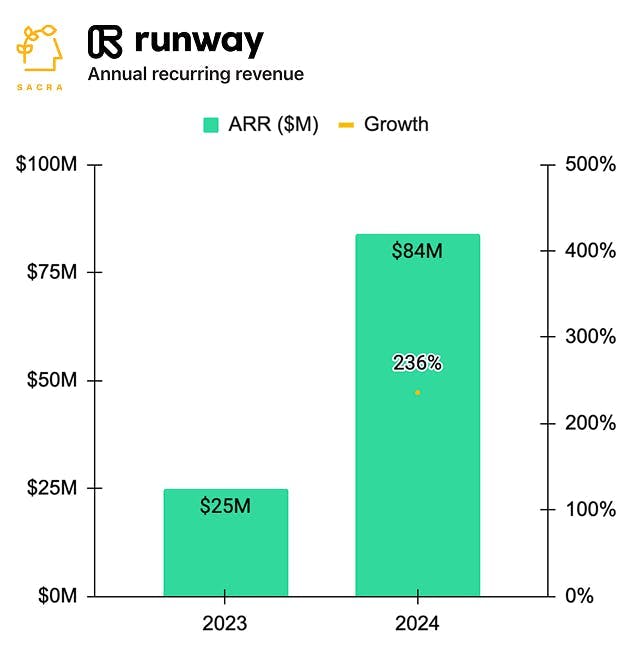

TL;DR: Founded in 2018, Runway builds AI-powered automations that help filmmakers and VFX teams cut their video editing costs from ~$350 to ~$10 per shot. Following the 2024 launch of its Gen-3 model, Runway grew to a Sacra-estimated $84M ARR in 2024, up 236% YoY. For more, check out our full report and dataset on Runway.

Key points from our report:

- Founded in 2018, Runway’s first product was ML Lab, a playground for artists to test open-source ML models on their images and videos. That led to the development of its own Gen-* foundation models and a web-based video editor (2022) with ~35 AI tools that VFX teams use to cut tedious tasks like rotoscoping from 6+ hours per shot to ~10 minutes. Where incumbent editing tools like Foundry’s Nuke help teams of 30-100+ VFX artists make many fine-grained edits and charge $5,000-$10,000/seat per year upfront, Runway’s AI-powered automations help small teams (like Everything Everywhere All At Once’s 6 VFX artists) repeat the same edits across many frames. Runway monetizes through a tiered subscription model ($12-$76/month, roughly $1 for each 10 seconds of video generated) that reduces the overall cost per shot from ~$350 to ~$10.

- The 2024 launch of Runway’s Gen-3 model went beyond text-to-video, inpainting, green screen and rotoscoping to directing camera movement, expanding frames, and generating characters that are consistent from scene to scene. The launch drove a surge of demand from independent filmmakers, with Sacra estimating that Runway hit $84M ARR in 2024, up 236% YoY from $25M at the end of 2023. Compare that to generative AI startups like ElevenLabs at $90M ARR in November 2024, up 260% since the end of 2023, with a $3.3B valuation for a 37x multiple, and AI video editing platform OpusClip at $20M ARR in March 2025, up 150% YoY, with a $215M valuation for a 10.75x forward revenue multiple.

- Unlike foundation model companies like OpenAI (Sora in ChatGPT) & Google (Veo in Gemini) that bundle text-to-video into their horizontal AI consumer products, and product companies like Pika & OpusClip that wrap open-source models to tackle highly specific jobs like adding filters to videos (Pika) or creating social media clips (OpusClip), Runway stands apart with its vertically integrated approach: a video-production-specific foundation model paired with the workflows and tooling filmmakers need. In September 2024, after years of training its models on YouTube videos, Runway inked a deal with Lionsgate to train a model on the studio’s 20,000 titles. The deal gives Runway access to a “Hollywood-grade” video dataset and gives Lionsgate the legal air cover to accelerate its use of AI in preproduction (storyboarding, drafting scenes) and postproduction (creating backgrounds and special effects), with the upside of potentially licensing the model out to other studios in the future.
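
The growth rates and multiples cited in the key points above follow from simple arithmetic. Here is a minimal Python sketch for checking them, assuming growth is computed as (current - prior) / prior and a revenue multiple as valuation / ARR. The function names are illustrative and not part of Sacra's dataset, and the inputs are the rounded figures quoted above.

```python
def yoy_growth_pct(prior: float, current: float) -> float:
    """Percent growth from a prior-period value to the current value."""
    return (current - prior) / prior * 100.0


def revenue_multiple(valuation: float, arr: float) -> float:
    """Valuation divided by annual recurring revenue."""
    return valuation / arr


def cost_reduction_pct(before: float, after: float) -> float:
    """Percent reduction in cost from `before` to `after`."""
    return (before - after) / before * 100.0


# Runway: $25M ARR at end of 2023 to $84M ARR in 2024.
print(f"Runway ARR growth: {yoy_growth_pct(25e6, 84e6):.0f}%")           # 236%

# ElevenLabs: $3.3B valuation on $90M ARR (quoted above as 37x).
print(f"ElevenLabs multiple: {revenue_multiple(3.3e9, 90e6):.1f}x")      # 36.7x

# OpusClip: $215M valuation on $20M ARR.
print(f"OpusClip multiple: {revenue_multiple(215e6, 20e6):.2f}x")        # 10.75x

# Cost per shot: ~$350 down to ~$10.
print(f"Cost per shot reduction: {cost_reduction_pct(350, 10):.0f}%")    # 97%
```

The ElevenLabs figure rounds up to the 37x quoted above; the other results match the stated numbers directly.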

For more, check out this other research from our platform:

- Runway (dataset)

- Cristóbal Valenzuela, CEO of Runway, on the state of generative AI in video

- Cristóbal Valenzuela, CEO of Runway, on rethinking the primitives of video

- AI-native SoundCloud

- Suno (dataset)

- OpenArt at $12M ARR growing 1,100% YoY

- OpenArt (dataset)

- Coco Mao, CEO of OpenArt, on building the TikTok for AI video

- Canva (dataset)

- Figma (dataset)

- Miro (dataset)