$16M ARR Amplitude for AI code quality

Jan-Erik Asplund


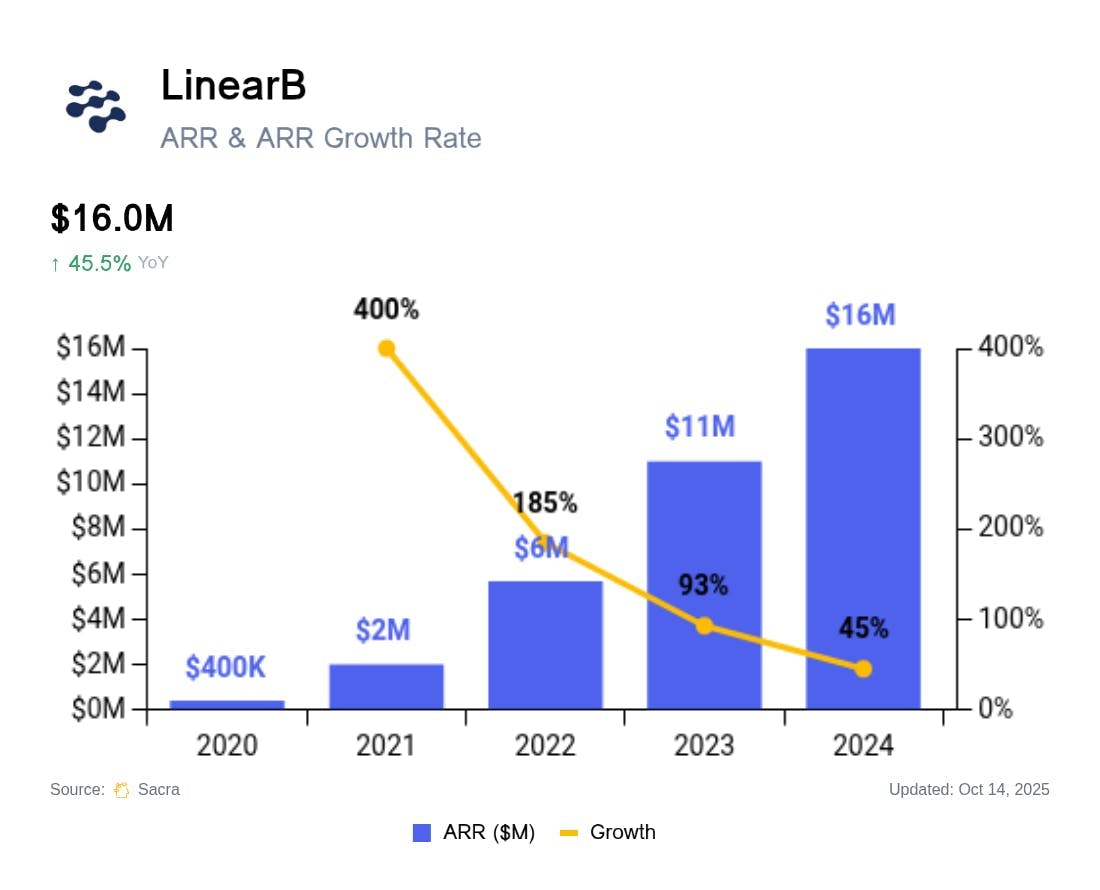

TL;DR: With AI coding tools like Cursor promising to 100x developer productivity, a new market for AI-native engineering analytics has opened up as every company looks to track AI coding’s impact on code velocity and quality. Sacra estimates LinearB hit $16M in annual recurring revenue (ARR) in 2024, up 45% year-over-year from $11M in 2023. For more, check out our full report and dataset.

Key points via Sacra AI:

- In the 2010s, the takeoff of cloud computing & continuous deployment elevated the importance of software velocity, giving rise to frameworks like DORA (popularized by Google) that measure 1) deployment frequency, 2) lead time for changes, 3) time to recovery, and 4) change failure rate. These frameworks inspired LinearB (founded 2018) to productize the metrics into automated dashboards populated with data ingested from tools such as GitHub (code velocity), Jenkins (deployments), CircleCI (CI/CD), and PagerDuty (incident response time). Selling primarily to mid-market companies and startups, LinearB charges ~$30/month per developer for dashboards and layers usage-based pricing on top for automations like AI code review & automated pull requests. A more nascent enterprise business, built on custom dev-tool integrations, drives $100K–$300K contracts.
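The four DORA metrics are simple aggregates over deployment and incident records, which is why they lend themselves to automated dashboards. The Python below is a minimal sketch, assuming hypothetical `Deployment` and `Incident` records that have already been joined from a Git host, a CI/CD system, and an incident-response tool. The record types, field names, and the `dora_metrics` helper are illustrative only, not LinearB's actual schema or API. Definitions also vary in practice (for example, whether lead time starts at first commit or at pull-request open), and this sketch picks one simple convention for each metric.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Deployment:
    """Hypothetical deployment record joined from Git host + CI/CD data."""
    commit_time: datetime  # first commit in this deploy (one possible lead-time start)
    deploy_time: datetime  # when the change reached production
    failed: bool           # True if this deploy caused a production failure


@dataclass
class Incident:
    """Hypothetical incident record from an incident-response tool."""
    opened: datetime
    resolved: datetime


def dora_metrics(deploys: list[Deployment], incidents: list[Incident], window_days: int) -> dict:
    """Compute simplified versions of the four DORA metrics over a reporting window."""
    if not deploys or window_days <= 0:
        raise ValueError("need at least one deployment and a positive window")
    lead_times = [d.deploy_time - d.commit_time for d in deploys]
    restore_times = [i.resolved - i.opened for i in incidents]
    return {
        # 1) Deployment frequency: deploys per day in the window.
        "deployment_frequency_per_day": len(deploys) / window_days,
        # 2) Lead time for changes: median commit-to-production time.
        "median_lead_time": median(lead_times),
        # 3) Time to recovery: median incident open-to-resolve time (None if no incidents).
        "median_time_to_restore": median(restore_times) if restore_times else None,
        # 4) Change failure rate: share of deploys that caused a failure.
        "change_failure_rate": sum(d.failed for d in deploys) / len(deploys),
    }


# Usage with made-up sample data for one week.
t0 = datetime(2024, 1, 1)
deploys = [
    Deployment(t0, t0 + timedelta(hours=4), failed=False),
    Deployment(t0 + timedelta(days=1), t0 + timedelta(days=1, hours=2), failed=True),
    Deployment(t0 + timedelta(days=2), t0 + timedelta(days=2, hours=6), failed=False),
]
incidents = [Incident(t0 + timedelta(days=1, hours=3), t0 + timedelta(days=1, hours=4))]

m = dora_metrics(deploys, incidents, window_days=7)
print(m["median_lead_time"])               # 4:00:00
print(m["median_time_to_restore"])         # 1:00:00
print(round(m["change_failure_rate"], 2))  # 0.33
```

Medians are used for lead time and time to recovery because a single long-running change or outage would otherwise skew a mean.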

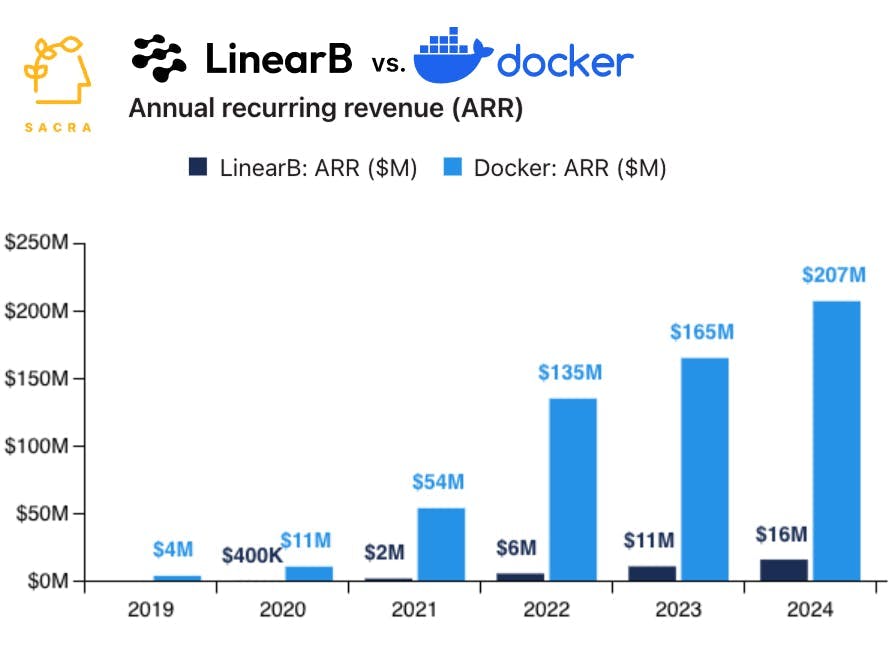

- With AI coding tools like Cursor ($500M ARR in May 2025) promising to 100x developer productivity, AI transformation became a pressing topic for engineering teams, creating demand for dashboards that track AI coding tools’ impact on code velocity and quality. Sacra estimates that LinearB hit $16M in annual recurring revenue (ARR) in 2024, up 45% year-over-year from $11M in 2023. Compare that to developer incident response company PagerDuty (NYSE: PD) at $484M TTM revenue (up 8.2% YoY), valued at $1.5B for a 3.0x multiple; cloud observability platform Datadog (NASDAQ: DDOG) at $3.02B TTM revenue (up 26% YoY), valued at $56B for an 18.5x multiple; and DevOps platform GitLab (NASDAQ: GTLB) at $858M TTM revenue (up 29% YoY), valued at $7.46B for an 8.7x multiple.

- As pre-AI engineering productivity companies like Jellyfish ($114M raised, Accel) and LinearB ($71M raised, Tribe Capital) have refashioned themselves as bundled dashboard and workflow tools with AI PR review and AI code policy controls, a new cohort of AI-native engineering productivity startups like Weave ($4.2M raised, Moonfire & Burst Capital) and Span ($1M raised, Alt Capital) are building dashboards around AI coding metrics like “lines of code written by AI”. As software engineering shifts from developers writing code by hand in an IDE to orchestrating agents through a chat CLI, coding tools like Claude Code and Cursor are bundling in features like AI code review & automatic security analysis that cross over into DevOps.
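The growth rate and revenue multiples quoted in the key points are straightforward ratios, and recomputing them from the stated figures is a quick sanity check. A minimal sketch in Python, using only the numbers given in the text (USD millions); the helper names `yoy_growth` and `revenue_multiple` are hypothetical. Recomputed from these rounded inputs, PagerDuty comes out at about 3.1x rather than the 3.0x quoted, presumably because the $1.5B valuation is itself rounded.

```python
def yoy_growth(current: float, prior: float) -> float:
    """Year-over-year growth as a fraction (0.45 == 45%)."""
    return current / prior - 1


def revenue_multiple(valuation: float, ttm_revenue: float) -> float:
    """Valuation divided by trailing-twelve-month (TTM) revenue."""
    return valuation / ttm_revenue


# LinearB ARR, Sacra estimates from the text (USD millions).
linearb_growth = yoy_growth(16, 11)
print(f"LinearB ARR growth: {linearb_growth:.0%}")  # LinearB ARR growth: 45%

# Public comparables from the text: (TTM revenue, valuation), USD millions.
comparables = {
    "PagerDuty": (484, 1_500),
    "Datadog": (3_020, 56_000),
    "GitLab": (858, 7_460),
}
multiples = {name: revenue_multiple(val, rev) for name, (rev, val) in comparables.items()}
for name, multiple in multiples.items():
    print(f"{name}: {multiple:.1f}x")
# PagerDuty: 3.1x
# Datadog: 18.5x
# GitLab: 8.7x
```

ARR is a forward-looking run rate while TTM revenue looks backward, so comparing LinearB's ARR against public companies' TTM revenue is approximate.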

For more, check out this other research from our platform:

- LinearB (dataset)

- Supabase (dataset)

- Replit (dataset)

- Vercel (dataset)

- $172M/year Heroku of vibe coding

- Vibe coding index

- Why OpenAI wants Windsurf

- Lovable vs Bolt.new vs Cursor

- Bolt.new at $40M ARR

- Claude Code vs. Cursor

- Cursor at $200M ARR

- Cursor at $100M ARR

- Lovable (dataset)

- Bolt.new (dataset)

- Anthropic (dataset)

- OpenAI (dataset)

- Scale (dataset)

- Cursor (dataset)