Revenue: $1.90B (2024)
Valuation: $23.00B (2024)
Growth Rate (y/y): 730% (2024)
Funding: $3.50B (2024)

Revenue

Sacra estimates that CoreWeave hit $1.9B in revenue in 2024, up 730% YoY from $229M in 2023. That growth was driven by rapidly accelerating demand for GPU compute from cloud providers, LLM companies, and the many apps integrating generative AI features. CoreWeave projects $8B in revenue for 2025.
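The growth figures are plain year-over-year arithmetic on the revenue numbers above. A minimal Python sketch, using only those figures (in millions of USD), reproduces the 730% and the growth implied by the $8B 2025 projection:

```python
def yoy_growth(current: float, prior: float) -> float:
    """Year-over-year growth as a fraction (0.5 means +50%)."""
    if prior <= 0:
        raise ValueError("prior-period value must be positive")
    return (current - prior) / prior

# Figures from the text, in millions of USD; 2025 is CoreWeave's own projection
revenue = {2023: 229.0, 2024: 1_900.0, 2025: 8_000.0}

growth_2024 = yoy_growth(revenue[2024], revenue[2023])
growth_2025 = yoy_growth(revenue[2025], revenue[2024])

print(f"2024 growth: {growth_2024:.0%}")          # 730%
print(f"2025 implied growth: {growth_2025:.0%}")  # 321%
```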

$10B of its $17B in booked contracts came from Microsoft, which agreed to a multi-year deal for CoreWeave to supply it with GPU compute amid rising demand from Azure cloud customers that Microsoft has not been able to meet.
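Customer concentration follows directly from the two contract figures. A short sketch using only the numbers in the text:

```python
booked_contracts = 17.0     # total booked contracts, $B (from the text)
microsoft_contracts = 10.0  # portion of those contracts from Microsoft, $B

microsoft_share = microsoft_contracts / booked_contracts
other_customers = booked_contracts - microsoft_contracts

print(f"Microsoft share of booked contracts: {microsoft_share:.0%}")  # 59%
print(f"All other customers: ${other_customers:.0f}B")               # $7B
```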

In 2024, the company reported a net loss of $863M, compared to a net loss of $594M in 2023.

Valuation

CoreWeave is valued at $23 billion as of 2024, following a secondary share sale with participation from Jane Street, Fidelity Management, and BlackRock.

Based on its 2024 revenue, CoreWeave's revenue multiple is roughly 12x ($23B valuation divided by $1.9B in revenue).
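The multiple is simply valuation over annual revenue. A minimal sketch with the two figures from the text (in $B):

```python
def revenue_multiple(valuation: float, revenue: float) -> float:
    """Valuation divided by annual revenue."""
    if revenue <= 0:
        raise ValueError("revenue must be positive")
    return valuation / revenue

multiple = revenue_multiple(23.0, 1.9)  # $23B valuation, $1.9B 2024 revenue
print(f"{multiple:.1f}x")  # 12.1x
```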

The company has raised over $12.7B in total equity and debt financing. Notable investors include Nvidia, a strategic partner, and Coatue, which led its $1.1B Series C round. Additional key investors include Magnetar, Macquarie Capital, and Pure Storage, the last of which also serves as a strategic technology partner.

Product

CoreWeave was founded in 2017 as Atlantic Crypto, an Ethereum mining company that bought Nvidia graphics processing units (GPUs) both to mine its own crypto and rent out GPU servers to other crypto miners. In early 2019, Atlantic Crypto changed its name to CoreWeave and pivoted to providing GPUs-on-demand for generalized computing purposes rather than focusing on crypto.

During this period, CoreWeave built infrastructure to deliver GPU compute across seven facilities globally, which positioned it well for the flood of demand for GPU compute that arrived in 2022 with the generative AI boom.

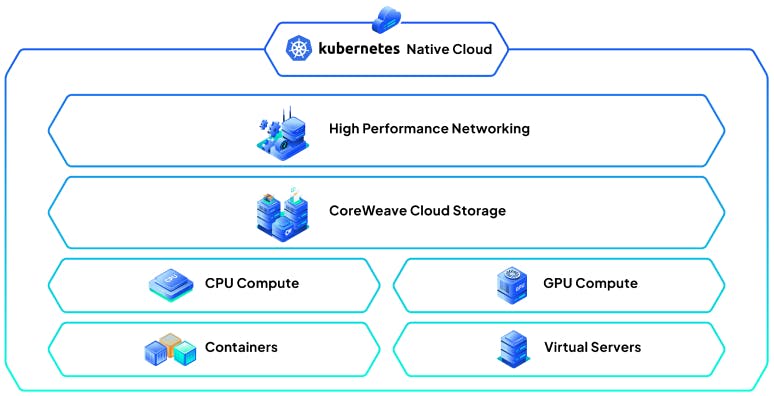

Today, CoreWeave is fundamentally a GPU-first cloud platform that lets developers and businesses access compute remotely, the same way they would with Amazon Web Services or Azure.

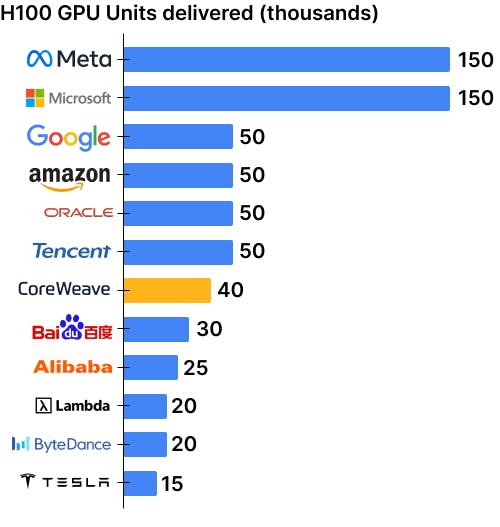

What differentiates CoreWeave is its far greater availability of the high-end GPUs designed for training and running large, complex AI workloads. With 45,000 GPUs, CoreWeave is the largest private provider of GPUs in North America. In early 2023, CoreWeave was one of the first cloud providers to offer access to Nvidia's new H100 Tensor Core GPUs on its platform.

While AWS and Azure customers report resource shortages on those platforms, CoreWeave's most-favored-nation relationship with Nvidia has allowed it both to scale faster to meet demand and to offer higher-powered GPUs.

AI Dungeon, a text adventure game built on GPT-2, found that serving its 1.6M users on the Cortex GPU compute platform on AWS drove response times up and cost too much to sustain. Switching to Tesla V100 GPUs delivered via CoreWeave's cloud cut AI Dungeon's response times by 50%.

Business Model

CoreWeave, like other cloud providers, operates on a model where it rents out computing resources (such as GPU power) to businesses and developers.

CoreWeave's ~85% gross margins come from the difference between the cost of maintaining these resources (including the initial investment in hardware, ongoing electricity, cooling, maintenance, and support staff costs) and the revenue generated from customers paying to use these resources.
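Gross margin is revenue minus the direct cost of delivering it, divided by revenue. The sketch below uses hypothetical round numbers (not CoreWeave's reported financials) to show what an ~85% gross margin means per dollar of revenue:

```python
def gross_margin(revenue: float, cost_of_revenue: float) -> float:
    """(revenue - cost of revenue) / revenue, as a fraction."""
    if revenue <= 0:
        raise ValueError("revenue must be positive")
    return (revenue - cost_of_revenue) / revenue

# Hypothetical illustration: $100 of GPU rental revenue carrying $15 of direct costs
# (hardware, electricity, cooling, maintenance, and support staff)
margin = gross_margin(100.0, 15.0)
print(f"{margin:.0%}")  # 85%
```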

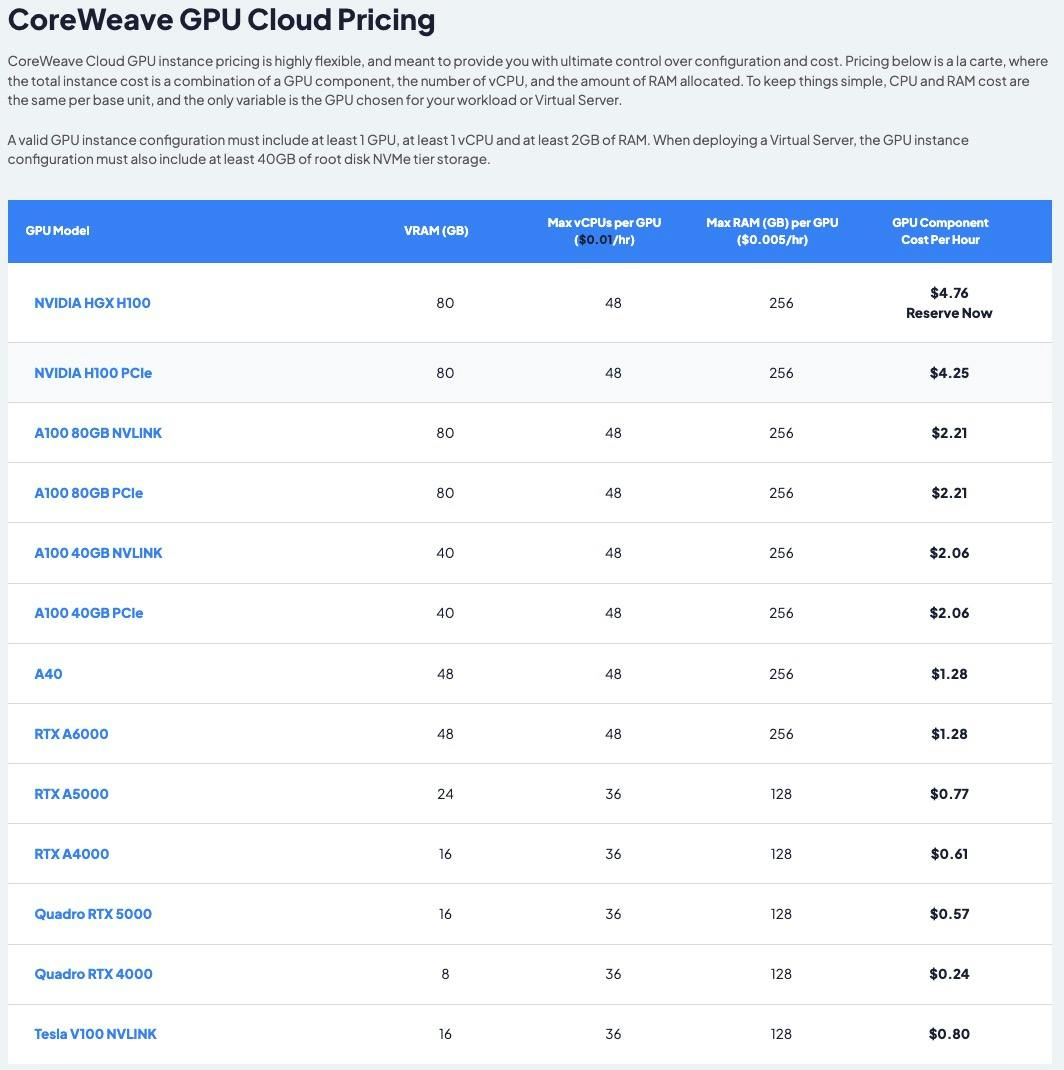

Customers pay CoreWeave for the computing power they use, typically on a per-hour basis. This payment model is attractive to customers because it allows for flexible scaling of resources based on demand, and they only pay for what they use. CoreWeave sets the rental price based on market demand, the specific GPU model (newer models with better performance command higher prices), and the operational costs to ensure a profitable margin.
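Pay-as-you-go billing reduces to units × hours × hourly rate, summed over line items. A minimal sketch, assuming the hourly rates quoted elsewhere in this report; the SKU labels and usage are hypothetical and are not CoreWeave's actual product identifiers or billing API:

```python
from dataclasses import dataclass

# Hourly rates quoted in this report (USD). SKU keys are hypothetical labels.
HOURLY_RATES = {
    "H100_PCIE": 4.25,     # per GPU-hour
    "A40": 1.278,          # per GPU-hour
    "HGX_H100_8X": 27.92,  # per hour for an 8-GPU node
}

@dataclass
class UsageLine:
    sku: str      # key into HOURLY_RATES
    units: int    # GPUs, or nodes for HGX_H100_8X
    hours: float  # billed hours

def invoice_total(lines: list[UsageLine]) -> float:
    """Sum of units x hours x hourly rate across all usage lines, rounded to cents."""
    return round(sum(HOURLY_RATES[l.sku] * l.units * l.hours for l in lines), 2)

# Hypothetical month of usage
usage = [
    UsageLine("H100_PCIE", units=4, hours=10),  # 4 * 10 * 4.25  = 170.00
    UsageLine("A40", units=2, hours=100),       # 2 * 100 * 1.278 = 255.60
]
print(invoice_total(usage))  # 425.6
```

Because the bill is linear in hours, a customer who scales down to zero GPUs pays nothing for that period, which is the flexibility described above.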

Like AWS, CoreWeave has an expansion motion: offering additional services on top of its core GPU compute product. So far, CoreWeave has added specialized offerings for data storage, networking, and CPU compute, each priced on a similar pay-as-you-go basis.

Expenses

CoreWeave incurs a significant upfront cost when purchasing GPUs and setting up data centers. However, these GPUs have a useful life of several years, during which CoreWeave can continually rent them out. The operational costs include electricity (GPUs are power-hungry), cooling (to prevent overheating), and staffing (for maintenance and customer support).

Improving the efficiency of data center operations (e.g., reducing electricity consumption, negotiating better rates for electricity, or improving cooling systems) can lower operational costs and thus improve margins.
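To see why electricity rates and cooling efficiency matter, here is a rough per-GPU energy cost model. Every input is an assumption for illustration only: a 350 W draw while rented, 80% utilization, a power usage effectiveness (PUE, total facility power divided by IT power) of 1.3 versus 1.2, and electricity at $0.08 versus $0.06 per kWh. None of these are CoreWeave figures.

```python
HOURS_PER_YEAR = 24 * 365

def annual_energy_cost(power_kw: float, utilization: float, pue: float, usd_per_kwh: float) -> float:
    """Yearly electricity cost for one GPU, with cooling/facility overhead folded in via PUE."""
    it_energy_kwh = power_kw * HOURS_PER_YEAR * utilization
    facility_energy_kwh = it_energy_kwh * pue  # adds cooling and other facility overhead
    return facility_energy_kwh * usd_per_kwh

# All inputs are hypothetical assumptions, not CoreWeave data
baseline = annual_energy_cost(power_kw=0.35, utilization=0.80, pue=1.3, usd_per_kwh=0.08)
improved = annual_energy_cost(power_kw=0.35, utilization=0.80, pue=1.2, usd_per_kwh=0.06)

print(f"baseline: ${baseline:,.0f}/GPU-year")             # $255/GPU-year
print(f"improved: ${improved:,.0f}/GPU-year")             # $177/GPU-year
print(f"savings:  ${baseline - improved:,.0f}/GPU-year")  # $78/GPU-year
```

Per GPU these sums are small next to rental revenue, but they scale linearly with fleet size: under these hypothetical inputs, the difference across 45,000 GPUs would be roughly $3.5M a year.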

Margin

The cost of a GPU for CoreWeave includes the purchase price and the operational costs over its lifespan. The revenue from a GPU is the cumulative amount paid by customers to rent the GPU over time. CoreWeave aims to maximize the utilization of each GPU to ensure that the revenue generated far exceeds the cost.

Margins are generally lowest on CoreWeave's higher-end GPUs. For example, a high-end H100 PCIe card might cost CoreWeave roughly $30,000. That GPU is then rented out at an average of $4.25 per hour. Assuming an 80% utilization rate, it would generate roughly $29,800 in revenue per year ($4.25/hour × 24 hours/day × 365 days/year × 80%), which is roughly break-even on the card's purchase price after one year at less than 100% utilization.
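Per-card revenue is rate × hours × utilization. A minimal sketch working from the stated inputs ($30,000 card, $4.25/hour, 80% utilization, 24 × 365 hours); it ignores operating costs, which would push payback out further:

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours

def annual_rental_revenue(hourly_rate: float, utilization: float) -> float:
    """Revenue from one GPU over a year at a given average utilization (0 to 1)."""
    return hourly_rate * HOURS_PER_YEAR * utilization

def payback_years(purchase_price: float, hourly_rate: float, utilization: float) -> float:
    """Years of rental revenue needed to cover the purchase price (ignores operating costs)."""
    return purchase_price / annual_rental_revenue(hourly_rate, utilization)

h100_revenue = annual_rental_revenue(4.25, 0.80)
h100_payback = payback_years(30_000, 4.25, 0.80)

print(f"H100 PCIe revenue: ${h100_revenue:,.0f}/year")         # $29,784/year
print(f"Payback on purchase price: {h100_payback:.2f} years")  # 1.01 years
```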

However, cheaper GPUs like the A40, which CoreWeave could have bought in bulk in 2021 before the generative AI boom, can generate much greater margins. At 80% utilization, an A40, which had a sticker price of $4,500 three years ago and is now rented out by CoreWeave at $1.278 per hour, would generate roughly $8,960 in revenue per year ($1.278/hour × 24 hours/day × 365 days/year × 80%), about twice its purchase price.
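Putting the two cards side by side makes the margin gap concrete. A sketch using only the prices, rates, and 80% utilization assumption from the text; payback here ignores operating costs:

```python
HOURS_PER_YEAR = 24 * 365
UTILIZATION = 0.80  # the report's assumed average utilization

# (purchase price in USD, CoreWeave hourly rate in USD), as quoted in the text
cards = {
    "H100 PCIe": (30_000, 4.25),
    "A40": (4_500, 1.278),
}

results = {}
for name, (price, rate) in cards.items():
    revenue = rate * HOURS_PER_YEAR * UTILIZATION
    results[name] = {
        "annual_revenue": revenue,
        "revenue_to_price": revenue / price,  # first-year revenue per $1 of hardware
        "payback_years": price / revenue,     # ignores operating costs
    }
    print(f"{name}: ${revenue:,.0f}/yr, {revenue / price:.2f}x price, payback {price / revenue:.2f} yr")
# H100 PCIe: $29,784/yr, 0.99x price, payback 1.01 yr
# A40: $8,956/yr, 1.99x price, payback 0.50 yr
```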

Competition

The market for GPU cloud services is highly competitive, with several key players, including major cloud providers like Amazon Web Services, Google Cloud and Azure as well as upstarts like Lambda Labs and Together AI, each offering unique advantages and targeting different segments of the AI and machine learning industry.

Big Cloud

The biggest long-term competition for CoreWeave is likely to come from the three major cloud providers: Google Cloud ($33B in revenue in 2023), Amazon Web Services ($91B in revenue in 2023), and Microsoft Azure ($26B in revenue in 2023). With far greater revenue scale than CoreWeave (about $229M in 2023), the big cloud platforms have the resources to invest both in acquiring GPUs and in developing their own silicon alternatives to Nvidia's GPUs.

So far, CoreWeave has been able to outmatch the biggest cloud providers on access to GPUs because it has enjoyed preferential treatment from Nvidia, which has allocated GPUs away from Amazon, Google, and Microsoft and toward CoreWeave. Notably, CoreWeave is the only major cloud provider among Nvidia's customers that is not developing its own AI chips to compete with Nvidia, making it an attractive customer for Nvidia to support.

Lambda Labs

Like CoreWeave, Lambda Labs is a public cloud provider that purchases GPUs from Nvidia and rents them out to AI companies and companies building AI features. Also like CoreWeave, Lambda Labs has received generous allocations of Nvidia GPUs. It was in talks with Nvidia about an investment in 2023, but as of February 2024, that deal had not happened.

Lambda Labs generally positions itself as the better option for smaller companies and developers working on less intensive computational tasks, offering Nvidia H100 PCIe GPUs at roughly $2.49 per hour, compared to $4.25 per hour at CoreWeave. On the other hand, Lambda Labs does not offer access to the more powerful HGX H100 (priced at CoreWeave at $27.92 per hour for a group of 8), which is designed for maximum efficiency in large-scale AI workloads.
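Normalizing the quoted prices to a per-GPU-hour basis makes the comparison explicit. A sketch using only the prices in the text; the HGX figure is simply the 8-GPU price divided by 8 and ignores differences in interconnect and performance between the two form factors:

```python
coreweave_h100_pcie = 4.25  # $/GPU-hour at CoreWeave (from the text)
lambda_h100_pcie = 2.49     # $/GPU-hour at Lambda Labs (from the text)
coreweave_hgx_node = 27.92  # $/hour for a group of 8 HGX H100 GPUs at CoreWeave
GPUS_PER_HGX_GROUP = 8

lambda_discount = 1 - lambda_h100_pcie / coreweave_h100_pcie
hgx_per_gpu_hour = coreweave_hgx_node / GPUS_PER_HGX_GROUP

print(f"Lambda discount vs CoreWeave on H100 PCIe: {lambda_discount:.0%}")  # 41%
print(f"CoreWeave HGX H100, per GPU-hour: ${hgx_per_gpu_hour:.2f}")        # $3.49
```

On these list prices, each GPU in CoreWeave's HGX group costs less per hour than its standalone H100 PCIe offering, though the HGX is priced as a group of 8.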

Lambda Labs generated about $20M in revenue in 2020 and was projecting $250M in 2023 and $600M in 2024 as of July last year. Lambda Labs is backed by Thomas Tull’s US Innovative Technology fund, B Capital, SK Telecom, Crescent Cove, Mercato Partners, 1517 Fund, Bloomberg Beta, and Gradient Ventures.

Together

Together is fundamentally a GPU reseller: it rents GPUs from CoreWeave, from big cloud platforms like Google Cloud, and from other sources (academic institutions, crypto miners, other companies), rents that capacity out to startups and AI companies, and bundles it with software for training and fine-tuning open-source AI models like Meta's Llama 2, Stability AI's Stable Diffusion, and its own RedPajama.

TAM Expansion

To date, CoreWeave's rapidly accelerating growth has been driven by high demand for GPUs and compute combined with low supply. CoreWeave's favored partner status with Nvidia has allowed it to offer better availability than the major cloud platforms while also undercutting them on price.

Looking forward, whether CoreWeave's advantage proves durable hinges on (1) the long-term state of the GPU industry and (2) CoreWeave's ability to build a differentiated AI compute platform.

GPUs

At the root of Nvidia's GPU shortage is a bottleneck at TSMC (Taiwan Semiconductor Manufacturing Company), specifically in chip-on-wafer-on-substrate (CoWoS) packaging capacity, which Nvidia's high-end data center GPUs require. TSMC currently expects the shortage to last until about March 2026. TSMC also recently announced plans for a $2.9B packaging facility, which should further ease shortages once it becomes operational in 2027.

Seeking to escape this shortage, the major cloud providers, as well as companies like Tesla, Meta, and OpenAI, have all begun or accelerated work on their own AI processors. That said, they also depend on TSMC to actually manufacture their chips, and because Nvidia is one of TSMC's biggest and longest-standing customers, Nvidia may retain a manufacturing advantage, at least until shortages are fully alleviated.

Tech

Last year, CoreWeave reported record-breaking LLM benchmark results using Nvidia HGX H100 instances—CoreWeave's platform, composed of one of the biggest clusters of HGX GPUs in the world, came in 29x faster than the next-fastest competitor.

That's one sign that CoreWeave could compete with the major cloud providers even after the present GPU shortages end. CoreWeave has designed its infrastructure from the ground up to serve GPU compute at scale, going back to 2017, when the company was mining Ethereum as Atlantic Crypto.

Over the last few years, AI workloads have generally grown in size and complexity, creating an expanding revenue opportunity for companies like CoreWeave that specialize in serving customers with the biggest compute needs.

If CoreWeave can continue to outmatch cloud rivals on performance, it could protect its moat even without the supply-and-demand dynamics that powered its growth to $1.9B in revenue in 2024.


DISCLAIMERS

This report is for information purposes only and is not to be used or considered as an offer or the solicitation of an offer to sell or to buy or subscribe for securities or other financial instruments. Nothing in this report constitutes investment, legal, accounting or tax advice or a representation that any investment or strategy is suitable or appropriate to your individual circumstances or otherwise constitutes a personal trade recommendation to you.

This research report has been prepared solely by Sacra and should not be considered a product of any person or entity that makes such report available, if any.

Information and opinions presented in the sections of the report were obtained or derived from sources Sacra believes are reliable, but Sacra makes no representation as to their accuracy or completeness. Past performance should not be taken as an indication or guarantee of future performance, and no representation or warranty, express or implied, is made regarding future performance. Information, opinions and estimates contained in this report reflect a determination at its original date of publication by Sacra and are subject to change without notice.

Sacra accepts no liability for loss arising from the use of the material presented in this report, except that this exclusion of liability does not apply to the extent that liability arises under specific statutes or regulations applicable to Sacra. Sacra may have issued, and may in the future issue, other reports that are inconsistent with, and reach different conclusions from, the information presented in this report. Those reports reflect different assumptions, views and analytical methods of the analysts who prepared them and Sacra is under no obligation to ensure that such other reports are brought to the attention of any recipient of this report.

All rights reserved. All material presented in this report, unless specifically indicated otherwise is under copyright to Sacra. Sacra reserves any and all intellectual property rights in the report. All trademarks, service marks and logos used in this report are trademarks or service marks or registered trademarks or service marks of Sacra. Any modification, copying, displaying, distributing, transmitting, publishing, licensing, creating derivative works from, or selling any report is strictly prohibited. None of the material, nor its content, nor any copy of it, may be altered in any way, transmitted to, copied or distributed to any other party, without the prior express written permission of Sacra. Any unauthorized duplication, redistribution or disclosure of this report will result in prosecution.